Deploying Kafka Clusters with TLS on Kubernetes using Koperator and Helm¶

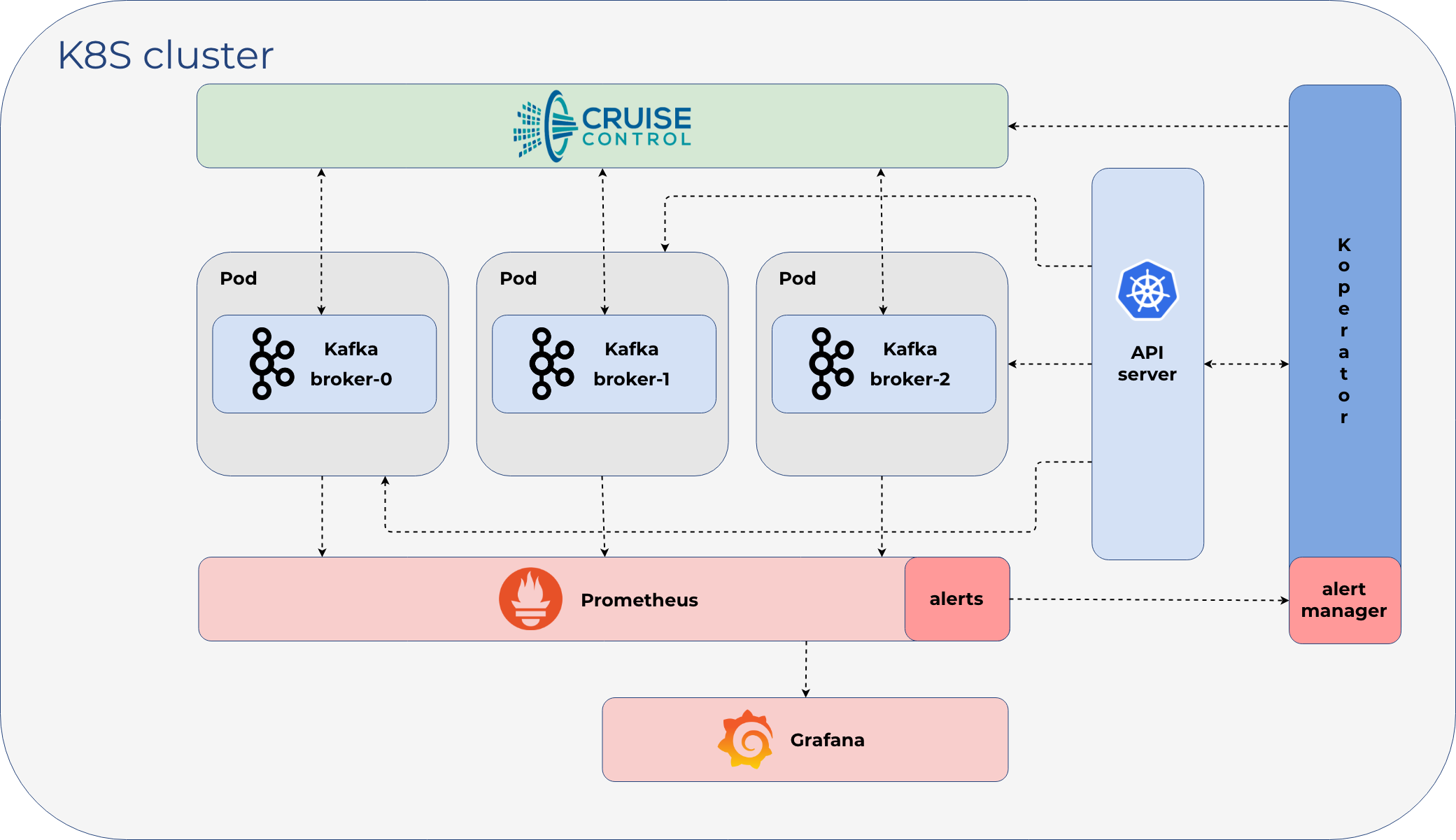

The CNCF Landscape is a great resource for anything you want to run in Kubernetes. There are many streaming and messaging options, and today I want to deploy a TLS-secured Kafka cluster using Koperator.

Prerequisites¶

Choosing Kubernetes means your organization is signing up for an ever-changing ecosystem, and this guide will likely be dated in the coming years.

Minikube¶

I’m running a minikube cluster on Kubernetes 1.24.3 with 48 CPUs and 128 GB of RAM.

minikube profile list -o json | jq -r '.valid[0].Config | "cpus: " + (.CPUs | tostring) + " memory: " + (.Memory | tostring)'

cpus: 48 memory: 128000

kubectl get nodes | grep minikube

minikube Ready control-plane 13d v1.24.3

Helm¶

I’m using helm 3.9.3:

helm version --short

v3.9.3+g414ff28

Getting Started¶

Zookeeper¶

Note

Previously, Kafka helm charts bundled Zookeeper into the same chart as the Kafka cluster, but Koperator does not recommend that approach. Instead, you have to deploy and manage Zookeeper separately.

Install¶

Note

By default, the Zookeeper operator manages zookeepercluster objects in every namespace.

helm repo add pravega https://charts.pravega.io

helm repo update

helm upgrade --install zookeeper-operator --namespace=zookeeper --create-namespace pravega/zookeeper-operator

Output:

"pravega" has been added to your repositories

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "pravega" chart repository

Update Complete. ⎈Happy Helming!⎈

NAME: zookeeper-operator

LAST DEPLOYED: Wed Sep 7 14:15:22 2022

NAMESPACE: zookeeper

STATUS: deployed

REVISION: 1

TEST SUITE: None

Deploy the Zookeeper Cluster¶

kubectl create --namespace zookeeper -f - <<EOF
apiVersion: zookeeper.pravega.io/v1beta1
kind: ZookeeperCluster
metadata:
  name: zookeeper
spec:
  replicas: 1
EOF

Check the Zookeeper Cluster¶

kubectl get -n zookeeper zookeeperclusters

NAME        REPLICAS   READY REPLICAS   VERSION   DESIRED VERSION   INTERNAL ENDPOINT     EXTERNAL ENDPOINT   AGE
zookeeper   1                                     0.2.13            10.110.178.100:2181   N/A                 31s

Before continuing, wait until READY REPLICAS matches REPLICAS:

kubectl get -n zookeeper zookeeperclusters

NAME        REPLICAS   READY REPLICAS   VERSION   DESIRED VERSION   INTERNAL ENDPOINT     EXTERNAL ENDPOINT   AGE
zookeeper   1          1                0.2.13    0.2.13            10.110.178.100:2181   N/A                 102s
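Instead of re-running the command by hand, the wait can be scripted. Here is a minimal sketch, assuming the READY REPLICAS column is backed by the `.status.readyReplicas` field of the ZookeeperCluster object (I have not verified this against every operator version). The function name `wait_for_zookeeper` and its retry defaults are my own and purely illustrative.

```shell
# Poll a ZookeeperCluster until its ready replica count reaches the expected value.
# Assumption: READY REPLICAS is read from .status.readyReplicas.
# Usage: wait_for_zookeeper <namespace> <name> <expected> [attempts] [delay-seconds]
wait_for_zookeeper() {
  local namespace="$1"
  local name="$2"
  local expected="$3"
  local attempts="${4:-30}"
  local delay="${5:-5}"
  local ready=""
  local i=1
  while [ "$i" -le "$attempts" ]; do
    ready="$(kubectl get zookeeperclusters "$name" -n "$namespace" \
      -o jsonpath='{.status.readyReplicas}' 2>/dev/null)"
    if [ "$ready" = "$expected" ]; then
      echo "zookeepercluster/$name ready: $ready/$expected"
      return 0
    fi
    echo "waiting for zookeepercluster/$name: ${ready:-0}/$expected ready"
    sleep "$delay"
    i=$((i + 1))
  done
  echo "timed out waiting for zookeepercluster/$name" >&2
  return 1
}

# Example for the cluster created above:
#   wait_for_zookeeper zookeeper zookeeper 1
```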

Install Koperator with Helm¶

Deploy the Koperator into the Kafka namespace with Managed CRDs¶

For more customization options, refer to the Koperator helm chart.

helm repo add banzaicloud-stable https://kubernetes-charts.banzaicloud.com/

helm repo update

helm upgrade --install kafka-operator --set crd.enabled=true --namespace=kafka --create-namespace banzaicloud-stable/kafka-operator

Output:

Update Complete. ⎈Happy Helming!⎈

Release "kafka-operator" does not exist. Installing it now.

NAME: kafka-operator

LAST DEPLOYED: Wed Sep 7 15:22:24 2022

NAMESPACE: kafka

STATUS: deployed

REVISION: 1

TEST SUITE: None

Deploy a Kafka cluster with NodePorts¶

If you do not need Kubernetes NodePort access to the Kafka cluster, you can use the cluster yaml from the default guide instead. Otherwise, deploy the NodePort example:

kubectl create -n kafka -f https://raw.githubusercontent.com/jay-johnson/koperator/nodeport-with-headless-example/config/samples/simplekafkacluster-with-nodeport.yaml

Monitoring¶

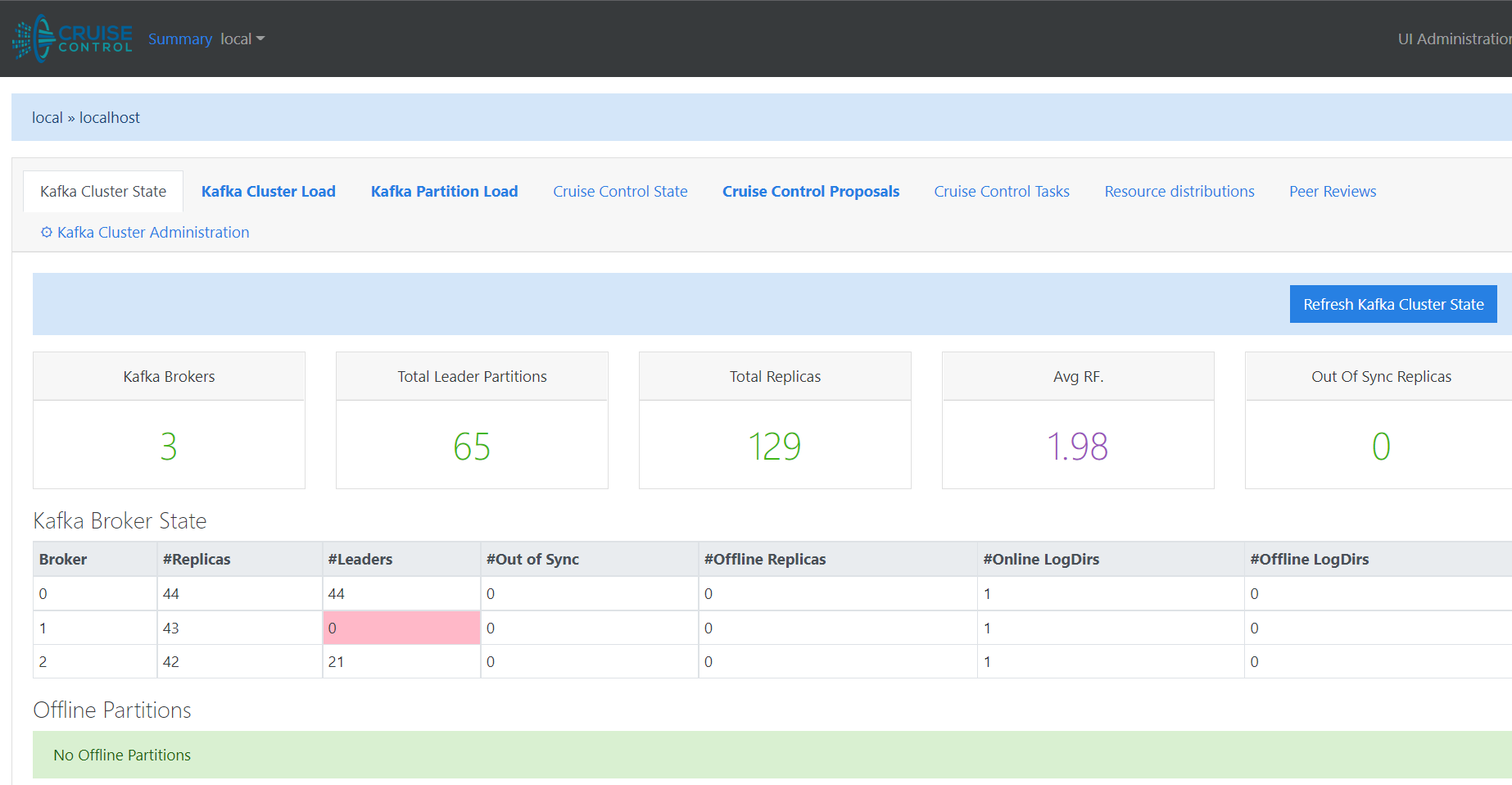

Cruise Control¶

Kubectl Port-Forward¶

Note

This is not a secure way to expose Cruise Control in production, but it works for demonstration purposes:

kubectl port-forward -n kafka svc/kafka-pr-cruisecontrol-svc --address 0.0.0.0 8090:8090

Troubleshooting¶

Check for errors¶

Did Cruise Control hit OOMKilled?

kafka-cruisecontrol-6fdb5757f6-hknm4 0/1 OOMKilled 0 42s

Add this to your cluster’s yaml under the cruiseControlConfig section:

cruiseControlConfig:
  resourceRequirements:
    limits:
      cpu: 3000m
      memory: 3000Mi
    requests:
      cpu: 1000m
      memory: 1000Mi
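Before raising the limits, it can help to confirm that the pod really was killed for memory. A rough sketch, assuming the standard pod status fields `state.terminated.reason` and `lastState.terminated.reason`; the helper name `was_oom_killed` is hypothetical and not part of kubectl or Koperator.

```shell
# Succeed if any container in the pod is currently, or was last, terminated
# with reason OOMKilled.
# Usage: was_oom_killed <namespace> <pod>
was_oom_killed() {
  local namespace="$1"
  local pod="$2"
  local reasons=""
  # Collect the current and previous termination reasons of all containers.
  reasons="$(kubectl get pod "$pod" -n "$namespace" \
    -o jsonpath='{.status.containerStatuses[*].state.terminated.reason} {.status.containerStatuses[*].lastState.terminated.reason}')"
  case "$reasons" in
    *OOMKilled*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example, using the pod name from the listing above:
#   was_oom_killed kafka kafka-cruisecontrol-6fdb5757f6-hknm4 && echo "raise the memory limit"
```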

Note

I also had to fully delete the Kafka cluster and the Koperator to get this change to work.

Why does the CruiseControl pod never enter the Running state?

As of 2022-09-07, Koperator does not use StatefulSets for the Kafka pods. Instead, Koperator starts all of the Kafka cluster pods immediately on deployment rather than letting them start one at a time (which is how most other clusterable db/messaging technologies start up on Kubernetes). This architecture decision sometimes causes 1, 2 or even all 3 pods to restart automatically during startup. When one of these Kafka cluster pods restarts, the dedicated Kubernetes service(s) for that pod are recreated as well. That appears to cause downstream networking issues where 1 or 2 of the other Running Kafka pods can no longer reach the newly restarted pod through the newly recreated Kafka Kubernetes service. Hopefully no other Koperator users are hitting this startup issue outside of a minikube testing environment.

After watching my minikube Kafka cluster deployments hang time after time, I have found that this deployment order results in a cleanly running cluster that I don’t have to debug each time:

1. Deploy Koperator (one time operation)
2. Delete any previously auto-generated tls secrets created by Koperator

   kubectl delete secret -n dev dev-kafka-controller dev-kafka-server-certificate

3. Deploy the Kafka cluster
4. Wait for 30 seconds
5. Restart the Kafka cluster pods one at a time with a 30 second gap between each pod restart
6. Wait for CruiseControl to enter the Running state
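The one-at-a-time restart is tedious by hand, so here is a rough sketch of how it could be scripted. It makes two assumptions that are not from the steps above: that "restart" means deleting a pod and letting Koperator recreate it, and that the broker pods can be selected with labels such as `app=kafka,kafka_cr=dev-kafka` (check with `kubectl get pods -n dev --show-labels` first). The function name is hypothetical.

```shell
# Delete the matching pods one at a time, pausing between deletions so the
# operator can recreate each pod before the next one goes away.
# Usage: restart_brokers_one_at_a_time <namespace> <label-selector> [gap-seconds]
restart_brokers_one_at_a_time() {
  local namespace="$1"
  local selector="$2"
  local gap="${3:-30}"
  local pod=""
  # "-o name" prints entries such as pod/<name>, which "kubectl delete" accepts.
  for pod in $(kubectl get pods -n "$namespace" -l "$selector" -o name); do
    echo "restarting $pod"
    kubectl delete -n "$namespace" "$pod"
    sleep "$gap"
  done
}

# Example (the label selector is an assumption, see above):
#   restart_brokers_one_at_a_time dev app=kafka,kafka_cr=dev-kafka 30
```

The pod list is captured once, before the first deletion, so pods recreated during the loop are not restarted a second time.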

Why can’t I use my own tls certificates, keys and CA?

I tried for two days to inject already-existing tls assets using the 3 supported, documented ssl attributes for the Kafka cluster (sslSecrets, serverSSLCertSecret, and clientSSLCertSecret from the Banzai docs), and each time I saw Koperator create new tls keys, certificates and CA for my internal and external listeners (openssl s_client -connect made it easy to see the CN and SANs were not mine). Sadly, I never got clientSSLCertSecret to load/work at all, and Koperator would log a cryptic tls error message before deploying the Kafka cluster if I tried to use clientSSLCertSecret.

I found it easier to configure the Kafka cluster to generate its own keys, certificates and CA then extract them from the cluster. Once I have the tls assets outside the kubernetes cluster, then I can start hooking up my next blog article about using Rust TLS with Kafka.

Here’s my Kafka cluster config listening on external TCP NodePorts 32000-32002 with tls and reduced logging:

Extract Auto-Generated TLS Assets from an already-running Kafka cluster pod

kubectl get secret -n dev -o yaml dev-kafka-server-certificate | grep tls.key | awk '{print $NF}' | base64 -d > tls.key
kubectl get secret -n dev -o yaml dev-kafka-server-certificate | grep tls.crt | awk '{print $NF}' | base64 -d > tls.crt
kubectl get secret -n dev -o yaml dev-kafka-server-certificate | grep ca.crt | awk '{print $NF}' | base64 -d > ca.crt
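As an alternative to grep and awk, kubectl's jsonpath output can select a single key of the secret directly. This is only a sketch: the helper names `extract_secret_key` and `extract_kafka_tls_assets` are mine, and it assumes GNU-style `base64 -d` as used in the commands above.

```shell
# Write one base64-decoded key of a Kubernetes secret to a file.
# Usage: extract_secret_key <namespace> <secret> <key> <output-file>
extract_secret_key() {
  local namespace="$1"
  local secret="$2"
  local key="$3"
  local out="$4"
  local escaped=""
  # jsonpath treats "." as a path separator, so tls.key must become tls\.key
  escaped="$(printf '%s' "$key" | sed 's/\./\\./g')"
  kubectl get secret "$secret" -n "$namespace" \
    -o "jsonpath={.data.${escaped}}" | base64 -d > "$out"
}

# Extract the three assets used in this guide into the current directory.
extract_kafka_tls_assets() {
  local key=""
  for key in tls.key tls.crt ca.crt; do
    extract_secret_key dev dev-kafka-server-certificate "$key" "./$key" || return 1
  done
}
```

Selecting the key by path avoids accidentally matching the same string elsewhere in the YAML output, for example inside an annotation.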

Verify TLS works with OpenSSL on the Kafka NodePort

openssl s_client -connect dev-kafka-all-broker.dev.svc.cluster.local:32000 -key ./tls.key -cert ./tls.crt -CAfile ./ca.crt -verify_return_error

Note

If you do not have a real dns set up, then please ensure /etc/hosts assigns your minikube ip (192.168.49.2 by default) to dev-kafka-all-broker.dev.svc.cluster.local

Are Zookeeper’s install upgrade pods failing with an Error state?

If your Zookeeper pods got stuck, please delete the job:

zookeeper zookeeper-operator-post-install-upgrade-2bgjb 0/1 Error 0 3m14s
zookeeper zookeeper-operator-post-install-upgrade-9grpk 0/1 Error 0 4m56s
zookeeper zookeeper-operator-post-install-upgrade-bttph 0/1 Error 0 6m38s
zookeeper zookeeper-operator-post-install-upgrade-bvdgh 0/1 Error 0 3m48s
zookeeper zookeeper-operator-post-install-upgrade-gsnd9 0/1 Error 0 2m6s
zookeeper zookeeper-operator-post-install-upgrade-jqzd4 0/1 Error 0 2m40s
zookeeper zookeeper-operator-post-install-upgrade-n24lg 0/1 Error 0 58s
zookeeper zookeeper-operator-post-install-upgrade-nf9jz 0/1 Error 0 4m22s
zookeeper zookeeper-operator-post-install-upgrade-t4r8r 0/1 Error 0 5m30s
zookeeper zookeeper-operator-post-install-upgrade-vbpcm 0/1 Error 0 6m5s
zookeeper zookeeper-operator-post-install-upgrade-vrkx2 0/1 Error 0 92s

kubectl delete job -n zookeeper zookeeper-operator-post-install-upgrade

Uninstall¶

Uninstall Kafka Cluster¶

kubectl delete -n kafka -f https://raw.githubusercontent.com/jay-johnson/koperator/nodeport-with-headless-example/config/samples/simplekafkacluster-with-nodeport.yaml

Uninstall Koperator¶

Note

This will hang if there are any kafkaclusters still in use

helm delete -n kafka kafka-operator
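To avoid the hang, you can check for leftover custom resources before removing an operator. A minimal sketch; the function name `uninstall_operator_if_unused` is hypothetical, and it assumes that `kubectl get <resource> --all-namespaces -o name` prints nothing on stdout when no objects exist.

```shell
# Refuse to uninstall a helm release while custom resources of the given kind
# still exist in any namespace.
# Usage: uninstall_operator_if_unused <resource> <namespace> <release>
uninstall_operator_if_unused() {
  local resource="$1"
  local namespace="$2"
  local release="$3"
  local remaining=""
  remaining="$(kubectl get "$resource" --all-namespaces -o name)" || return 1
  if [ -n "$remaining" ]; then
    echo "refusing to uninstall $release; still present:" >&2
    echo "$remaining" >&2
    return 1
  fi
  helm delete -n "$namespace" "$release"
}

# Examples:
#   uninstall_operator_if_unused kafkaclusters kafka kafka-operator
#   uninstall_operator_if_unused zookeeperclusters zookeeper zookeeper-operator
```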

Uninstall Zookeeper Cluster¶

kubectl delete -n zookeeper zookeeperclusters zookeeper

Uninstall Zookeeper Operator¶

Note

This will hang if there are any zookeeperclusters still in use

helm delete -n zookeeper zookeeper-operator

kubectl delete crd zookeeperclusters.zookeeper.pravega.io

Sources¶

For those who want to refer to the official docs, I followed the Install the Kafka Operator guide.